Wonderful World of Open-Weights AI

Imagine running a powerful AI model on your own laptop or server, with no external API and complete control. In recent years, a flourishing ecosystem of open-weights AI has emerged, offering software engineers unprecedented freedom to experiment, build, and deploy AI models. This blog post dives deep into the wonderful world of open-weights AI – what it is, why it matters, and how you can get started. We’ll explore the tools, models, techniques, and tips that make up this exciting landscape.

📣 This post is based on my talk of the same title at Google Developers Group Melbourne (March 2025). You can find the complete demo code and slides in the GitHub repository.

What Is Open-Weights AI?

Open-Weights AI refers to AI models whose trained parameters (“weights”) are openly available for anyone to download and run. In other words, you’re not limited to calling a remote API – you can actually obtain the neural network itself and deploy it locally or on your own infrastructure. This is in contrast to closed models (whose weights are proprietary or hidden behind an API). Key characteristics of open-weights models include:

- Access to Model Weights – You can download the actual model files (often from repositories like Hugging Face or model websites) and load them into your environment. This means you own a copy of the intelligence, rather than renting it via a cloud API.

- Local Execution – Since you have the weights, you can run inference on your own hardware (laptop, desktop, server, etc.) without internet connectivity once the model is downloaded. Your data stays local.

- Modifiability – Open-weight models often allow fine-tuning or modifications. You can adapt the model to your needs.

- Community and Transparency – These models are usually released by research labs or companies with some level of openness (though licenses vary). Often the architecture and training details are published, which fosters community contributions and understanding of how the model works.

Note: “Open-weights” doesn’t always mean fully “open-source” in the strict licensing sense – some models have usage restrictions. But generally it means the weights are downloadable and usable for at least research or personal purposes. The trend towards open-weights AI has accelerated since the release of models like Meta’s LLaMA, which sparked an entire movement of publicly available large language models (LLMs).

Why Open-Weights? (Privacy, Cost, Community)

Open-weight AI models offer a host of benefits for developers and organizations. Here are some major advantages:

- Privacy: Because you run the model locally or on your private server, your data never leaves your environment. This is crucial if you need to analyze sensitive documents or user data.

- Cost Efficiency: Running models locally can save costs in the long run. There’s an upfront cost for hardware and initial setup, but you won’t be paying per-query fees.

- Innovation and Community: The open-weight ecosystem is brimming with innovation. New models and techniques are shared openly, and developers worldwide contribute improvements (from optimization tricks to new fine-tuned variants).

Tools for Running Open-Weight Models

- Ollama – Ollama is a popular open-source tool that acts as a lightweight framework and package manager for local LLMs. It lets you easily download and run models with simple commands. Ollama is actively maintained and has a growing community. Besides its CLI, it offers a built-in API server mode (listening on localhost port 11434 by default). For example, once you install Ollama, you can run a model with a single command:

```shell
# Download (on first use) and run an open-weight model, Gemma 3 1B, locally using Ollama:
ollama run gemma3:1b
```

- Open WebUI – Formerly known as Ollama Web UI, Open WebUI is an extensible, self-hosted web interface for LLMs. It provides a ChatGPT-style chat interface in your browser that runs entirely offline, connecting to your local models.

In addition to these, there are many other helpful tools and libraries, such as llama.cpp, llamafile, LM Studio, and Hugging Face Transformers.
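Ollama's API server mode is what most applications talk to. Below is a minimal sketch that calls its `/api/generate` endpoint using only Python's standard library. It assumes Ollama is running locally on its default port (via the desktop app or `ollama serve`) and that `gemma3:1b` has already been pulled; the helper names `build_generate_request` and `generate` are illustrative, not part of any library.

```python
import json
import urllib.request

OLLAMA_URL = "http://localhost:11434"  # Ollama's default address (assumption: local install)


def build_generate_request(model: str, prompt: str, base_url: str = OLLAMA_URL) -> urllib.request.Request:
    """Build a non-streaming POST request for Ollama's /api/generate endpoint."""
    payload = {
        "model": model,
        "prompt": prompt,
        "stream": False,  # ask for one JSON object instead of a token-by-token stream
    }
    return urllib.request.Request(
        f"{base_url}/api/generate",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def generate(model: str, prompt: str, base_url: str = OLLAMA_URL, timeout: float = 120.0) -> str:
    """Send the prompt to a local Ollama server and return the generated text."""
    request = build_generate_request(model, prompt, base_url)
    with urllib.request.urlopen(request, timeout=timeout) as response:
        body = json.load(response)
    # With stream=False, the full completion is returned in the "response" field.
    return body["response"]
```

With the server running, `generate("gemma3:1b", "Explain open-weight models in one sentence.")` returns the model's reply as a string. Keeping request construction separate from sending makes the payload easy to inspect and test without a running model.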

Diverse Capabilities

Open-weight models offer far more than just text generation.

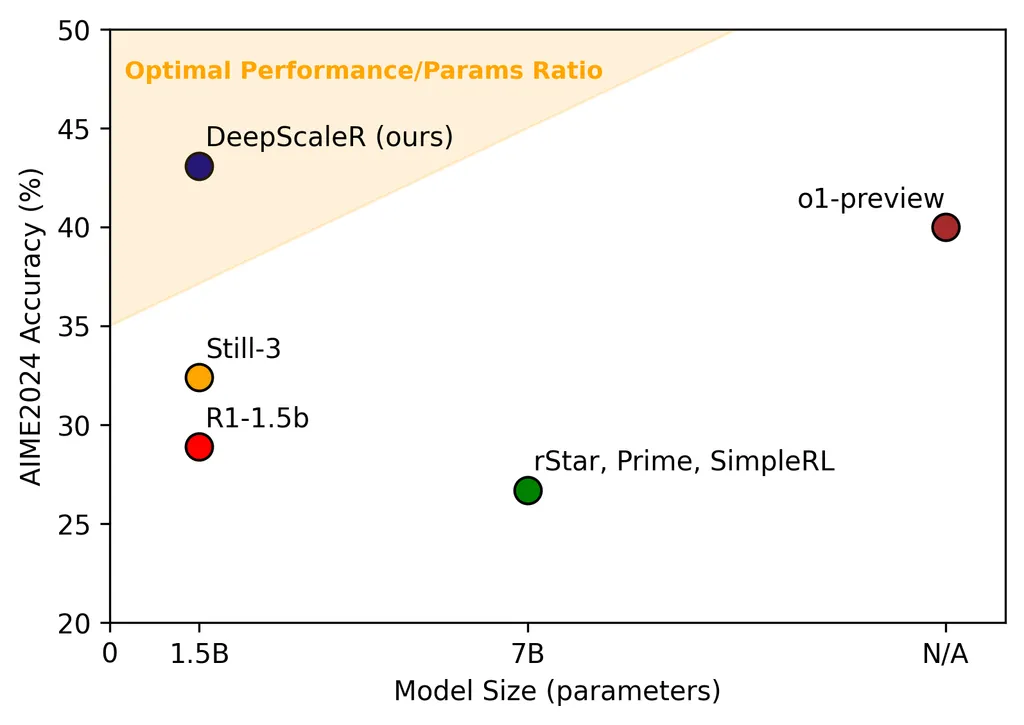

DeepScaleR 1.5B demonstrates impressive math abilities, even outperforming larger models on benchmarks like GSM8K and AIME.

Uncensored models, such as Dolphin-Mistral, place far fewer restrictions on the content they will generate, which makes them useful for exploratory projects. Additionally, models like Gemma 3 (27B) support multimodal input, enabling tasks like image captioning and complex visual reasoning. With models that support tool calling, developers can also build fully private, intelligent local agents that automate tasks without relying on cloud services.
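To make the tool-calling idea concrete, here is a minimal sketch of one agent step against Ollama's `/api/chat` endpoint, which accepts a `tools` list of JSON-schema function descriptions. It assumes a tool-capable model (for example `llama3.1`) is pulled locally. The `get_disk_usage` tool and all helper names are hypothetical. Note that the model only proposes calls; your code decides what actually runs.

```python
import json
import shutil
import urllib.request

OLLAMA_CHAT_URL = "http://localhost:11434/api/chat"  # default local address (assumption)


def get_disk_usage(path: str = ".") -> dict:
    """Hypothetical example tool: free disk space for a path, in GB."""
    usage = shutil.disk_usage(path)
    return {"path": path, "free_gb": round(usage.free / 1e9, 1)}


# Maps tool names the model can request to local Python functions.
TOOLS = {"get_disk_usage": get_disk_usage}

# JSON-schema description of each tool, sent along with the chat request.
TOOL_SPECS = [
    {
        "type": "function",
        "function": {
            "name": "get_disk_usage",
            "description": "Get free disk space for a filesystem path",
            "parameters": {
                "type": "object",
                "properties": {"path": {"type": "string", "description": "Filesystem path"}},
                "required": ["path"],
            },
        },
    }
]


def build_chat_payload(model: str, messages: list) -> dict:
    """Non-streaming /api/chat request body that advertises the available tools."""
    return {"model": model, "messages": messages, "tools": TOOL_SPECS, "stream": False}


def run_tool_calls(message: dict) -> list:
    """Run each tool call proposed in an assistant message and return 'tool' messages."""
    results = []
    for call in message.get("tool_calls", []):
        fn = call["function"]
        args = fn.get("arguments", {})
        if isinstance(args, str):  # OpenAI-style APIs send arguments as a JSON string
            args = json.loads(args)
        if fn["name"] in TOOLS:
            output = TOOLS[fn["name"]](**args)
        else:  # never execute a tool the registry does not know about
            output = {"error": f"unknown tool: {fn['name']}"}
        results.append({"role": "tool", "content": json.dumps(output)})
    return results


def chat_once(model: str, messages: list) -> dict:
    """Send one chat turn to the local server and return the assistant message."""
    request = urllib.request.Request(
        OLLAMA_CHAT_URL,
        data=json.dumps(build_chat_payload(model, messages)).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(request, timeout=120) as response:
        return json.load(response)["message"]
```

A full agent loop appends the assistant message and the returned `tool` messages to `messages`, then calls `chat_once` again so the model can answer using the tool results. Everything, including the tool execution, stays on your machine.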

Simple Projects to Get You Started

If you’re looking for practical ways to start experimenting with open-weight models, there are several engaging projects you can tackle. Let’s explore some concrete examples that showcase the power of local AI:

CLI Assistant

Create a command-line interface assistant that can help manage your daily tasks. Using models like Mistral or CodeLlama, you can build a tool that understands natural language commands and helps you navigate your system, write scripts, or debug code. For example, you could ask it to “find all Python files modified in the last week” or “help me write a regex pattern for email validation.”
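A minimal sketch of such an assistant, assuming a local Ollama server and a model such as `mistral` or `codellama`. It asks the model for a single shell command on a line starting with `CMD:` and only returns it, so nothing runs without your review. The system prompt wording, the `CMD:` convention, and the helper names are illustrative assumptions.

```python
import json
import urllib.request
from typing import List, Optional

# Illustrative system prompt: asks for a machine-readable "CMD:" line.
SYSTEM_PROMPT = (
    "You are a command-line assistant. Reply with one line that starts with 'CMD: ' "
    "followed by a single POSIX shell command, then one short line explaining it."
)


def build_messages(request_text: str) -> List[dict]:
    """Chat messages for Ollama's /api/chat endpoint."""
    return [
        {"role": "system", "content": SYSTEM_PROMPT},
        {"role": "user", "content": request_text},
    ]


def extract_command(reply: str) -> Optional[str]:
    """Return the command from the first 'CMD:' line of the reply, or None."""
    for line in reply.splitlines():
        stripped = line.strip()
        if stripped.startswith("CMD:"):
            return stripped[len("CMD:"):].strip()
    return None


def suggest_command(request_text: str, model: str = "mistral") -> Optional[str]:
    """Ask a local model for a command; the caller decides whether to run it."""
    payload = {"model": model, "messages": build_messages(request_text), "stream": False}
    req = urllib.request.Request(
        "http://localhost:11434/api/chat",  # default local Ollama address (assumption)
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(req, timeout=120) as resp:
        reply = json.load(resp)["message"]["content"]
    return extract_command(reply)
```

`print(suggest_command("find all Python files modified in the last week"))` might print something like `find . -name '*.py' -mtime -7`. The exact output depends on the model, which is why the sketch prints the suggestion instead of executing it.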

Financial Analysis

Build a private financial analysis system that processes your bank statements, investment reports, and financial documents locally. Using models like Llama 3, Mistral Small, or Gemma 3, you can create a system that analyzes spending patterns, generates budget recommendations, or performs risk assessments without exposing sensitive financial data to external services. For instance, you could ask it to “analyze my spending patterns from last month” or “generate a budget forecast based on my historical data.”
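One way to build this is to do the number crunching in plain code and hand the model only a compact summary. The sketch below assumes a CSV bank export with hypothetical `date,description,category,amount` columns where debits are negative (real exports differ by bank), aggregates spending per category, and builds a prompt you could send to a local model through Ollama's API. Nothing leaves your machine.

```python
import csv
import io
from collections import defaultdict
from decimal import Decimal


def spending_by_category(csv_text: str) -> dict:
    """Sum outgoing amounts per category from a CSV export (assumed column names)."""
    totals = defaultdict(Decimal)
    for row in csv.DictReader(io.StringIO(csv_text)):
        amount = Decimal(row["amount"])  # Decimal avoids float rounding on money
        if amount < 0:  # assumption: debits are negative in this export
            totals[row["category"]] += -amount
    return dict(totals)


def build_prompt(totals: dict, month: str) -> str:
    """Turn the aggregated totals into a short prompt, largest category first."""
    lines = [f"- {cat}: {total:.2f}" for cat, total in sorted(totals.items(), key=lambda kv: -kv[1])]
    return (
        f"Here is my spending by category for {month}:\n"
        + "\n".join(lines)
        + "\nSummarize the main spending patterns and suggest one realistic budget change."
    )
```

Pre-aggregating keeps the prompt short, which matters for small local models with limited context, and it leaves the arithmetic to code rather than to the model.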

Personal Knowledge Base

Transform your notes and documents into a searchable knowledge base. By using open-weight models to index and understand your content, you can create a system that answers questions about your personal documents using natural language. For instance, you could ask “What did I write about Python decorators last month?” or “Summarize my meeting notes from last week.” This is especially powerful when combined with vector databases for efficient retrieval.
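The retrieval half of such a system fits in a few lines: embed each note once, embed the question, and rank notes by cosine similarity. This sketch keeps vectors in a Python list as a stand-in for a vector database and takes the embedding function as a parameter. `ollama_embed` assumes Ollama's `/api/embed` endpoint and a locally pulled embedding model such as `nomic-embed-text`; the class and function names are illustrative.

```python
import json
import math
import urllib.request
from typing import Callable, List, Sequence, Tuple

Vector = List[float]


def ollama_embed(texts: Sequence[str], model: str = "nomic-embed-text") -> List[Vector]:
    """Embed texts with a local Ollama server (assumes the default port)."""
    req = urllib.request.Request(
        "http://localhost:11434/api/embed",
        data=json.dumps({"model": model, "input": list(texts)}).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(req, timeout=120) as resp:
        return json.load(resp)["embeddings"]


def cosine(a: Vector, b: Vector) -> float:
    """Cosine similarity; returns 0.0 when either vector has zero length."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


class NoteIndex:
    """Tiny in-memory stand-in for a vector database."""

    def __init__(self, embed: Callable[[Sequence[str]], List[Vector]]):
        self.embed = embed
        self.notes: List[str] = []
        self.vectors: List[Vector] = []

    def add(self, notes: Sequence[str]) -> None:
        self.notes.extend(notes)
        self.vectors.extend(self.embed(notes))

    def search(self, question: str, k: int = 3) -> List[Tuple[float, str]]:
        """Return the k notes most similar to the question, best first."""
        query = self.embed([question])[0]
        scored = [(cosine(query, v), note) for v, note in zip(self.vectors, self.notes)]
        return sorted(scored, reverse=True)[:k]
```

In practice you would create `NoteIndex(ollama_embed)`, add your notes, and paste the top-ranked notes into a chat model's prompt as context, which is the retrieval-augmented generation (RAG) pattern. A real vector database replaces the linear scan once the collection grows.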

Infrastructure for Open-Weight Models

One of the beautiful aspects of open-weight AI is that you can deploy it on a wide range of hardware. Let’s talk about infrastructure – from tiny devices to cloud servers – and what to consider for each:

- MacBooks (Apple Silicon): Modern MacBooks (with M1/M2/M3 chips) are surprisingly capable for AI. Thanks to Metal support in libraries like llama.cpp, a MacBook Air can run a 7B model comfortably, and a MacBook Pro with more RAM and GPU cores can handle 13B or even 30B models (in 4-bit quantized form) with decent speed. If you’re a developer on macOS, you can likely get started immediately, since many tools (Ollama, llamafile, LM Studio) have native Mac support. The key consideration is memory: make sure you have enough RAM, as the model (or a quantized version of it) will occupy a chunk of it. Apple’s unified memory means that if you have, say, 16GB of RAM, that same pool also serves as the GPU’s VRAM. Running a 7B model in 4-bit might use ~4GB, which is fine.

- Gaming PCs / Workstations: This is the “enthusiast” sweet spot. If you have a decent GPU (say, an NVIDIA RTX-series card) with 8GB, 12GB, or more VRAM, you can run quite powerful models locally. For example, an RTX 4090 (24GB VRAM) can comfortably run a ~30B model in 4-bit mode, or a 7B or 8B model in full FP16 with room to spare, at interactive speeds. A 70B model in 4-bit (~35GB of weights) does not fit on a single 24GB card and needs a second GPU or partial offloading to the CPU. A mid-range card like the RTX 3060 (12GB) can load a 13B 4-bit model. The advantage of a GPU is speed; the disadvantage is higher power usage and some setup complexity with drivers. Don’t forget the CPU and RAM in these systems too: if you plan to use CPU inference, a high-core-count CPU (e.g., AMD Threadripper or Intel i9) can help churn through tokens faster, and plenty of system RAM is needed to hold any part of the model that is not offloaded to the GPU. As an engineer, using your gaming PC for AI experiments is often the fastest path to iterate on ideas without waiting for cloud GPU instances.

- Servers with GPUs: If you’re thinking of deploying an open-weight model for multiple users or as a service, a server-grade GPU (or multiple GPUs) is ideal. NVIDIA A100s or H100s are the gold standard (with 40-80GB per GPU, you can run huge models or even serve multiple models). Many open models are used in enterprise settings by loading them on such GPUs and exposing an API internally. One big consideration on servers is scalability: you will often need to handle many requests in parallel. This is where optimized inference servers (like vLLM, Hugging Face Text Generation Inference, or custom solutions) shine, since they batch incoming requests to maximize GPU utilization. If you use multiple GPUs, some frameworks can split the model across them (tensor parallelism) to handle models larger than a single GPU’s memory.

- Cloud Providers: There are also cloud providers who specialize in hosting open models; they give you an endpoint for an open-weight model of your choice, which can be a convenient way to deploy if you don’t have physical hardware. However, deploying on your own server might be more cost-effective and keeps you in control of updates and data flow.
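Whether a given model fits in memory can be estimated with simple arithmetic: the weights take roughly parameters × bits per weight ÷ 8 bytes, so 7 billion parameters at 4 bits is about 3.5 GB. The sketch below adds an assumed 20% allowance for the KV cache and runtime buffers. That factor is a rough illustration only: real usage grows with context length, varies by runtime, and common 4-bit formats store slightly more than 4 bits per weight.

```python
def weight_memory_gb(params_billion: float, bits_per_weight: float) -> float:
    """Approximate memory for the weights alone: params x bits / 8 bytes (1 GB = 1e9 bytes)."""
    return params_billion * bits_per_weight / 8


def fits_in_memory(params_billion: float, bits_per_weight: float, available_gb: float,
                   overhead: float = 1.2) -> bool:
    """Rough check that weights plus an assumed ~20% runtime overhead fit in VRAM or unified memory."""
    return weight_memory_gb(params_billion, bits_per_weight) * overhead <= available_gb


# A few of the configurations discussed above: (params in billions, bits, memory in GB).
for params, bits, mem in [(7, 4, 16), (13, 4, 12), (30, 4, 24), (70, 4, 24), (13, 16, 24)]:
    need = weight_memory_gb(params, bits)
    print(f"{params}B @ {bits}-bit: ~{need:.1f} GB of weights, fits in {mem} GB: {fits_in_memory(params, bits, mem)}")
```

For example, a 70B model at 4-bit needs about 35 GB for its weights alone, more than a single 24 GB card holds, while a 13B model in FP16 needs about 26 GB.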

From a tiny Mac Mini to a multi-GPU cloud instance, open models give you the flexibility to deploy AI where it makes sense for you. Start with what you have (even a laptop); you may be amazed that you can run a powerful agent on an airplane with nothing but a laptop battery and an open model. Then scale up to a bigger machine as your needs grow.

Integrating and Building Applications

Using open-weight models in real applications is getting easier and more standardized every month. Whether you integrate via a high-level library like LangChain or call the API your model server exposes directly, you won’t be starting from scratch. In many cases, you can reuse the patterns you already use with OpenAI or other closed models, but now with the freedom to choose or customize the model backend.
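As an example of reusing existing patterns: Ollama, like vLLM and several other inference servers, also exposes an OpenAI-compatible Chat Completions endpoint under `/v1`, so the same request shape works against a hosted API or your own machine by changing only the base URL, model, and key. A standard-library sketch; the base URLs, model names, and helper names are illustrative assumptions.

```python
import json
import urllib.request
from typing import Optional

LOCAL_BASE_URL = "http://localhost:11434/v1"   # Ollama's OpenAI-compatible API (default port)
HOSTED_BASE_URL = "https://api.openai.com/v1"  # a hosted provider, for comparison


def build_chat_request(base_url: str, model: str, user_message: str,
                       api_key: Optional[str] = None) -> urllib.request.Request:
    """Build an OpenAI-style /chat/completions request for any compatible server."""
    headers = {"Content-Type": "application/json"}
    if api_key:  # local servers usually ignore the key; hosted APIs require one
        headers["Authorization"] = f"Bearer {api_key}"
    payload = {"model": model, "messages": [{"role": "user", "content": user_message}]}
    return urllib.request.Request(
        f"{base_url}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers=headers,
        method="POST",
    )


def chat(base_url: str, model: str, user_message: str, api_key: Optional[str] = None) -> str:
    """Send one user message and return the first choice's reply text."""
    req = build_chat_request(base_url, model, user_message, api_key)
    with urllib.request.urlopen(req, timeout=120) as resp:
        return json.load(resp)["choices"][0]["message"]["content"]
```

Moving `chat(LOCAL_BASE_URL, "gemma3:1b", ...)` to a hosted provider, or the reverse, is a matter of passing a different base URL, model, and key. Client libraries that let you set a custom base URL can be pointed at a local server in the same way.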

Tips for Getting Started and Succeeding with Open-Weight AI

To wrap up, here are some tips and guidance for software engineers who want to dive into building with open-weight AI models:

- Start Small and Simple: If you’re new to running models, begin with a smaller model (2B-7B parameters) and a straightforward tool like Ollama or LM Studio. This will let you validate your environment and see results quickly. You can always scale up to bigger models once you’re comfortable. Even a 7B model can be impressive for many toy applications.

- Choose the Right Model for the Job: With so many models out there, selection is key. Read up on model cards and evaluations. For a coding assistant, try a code-specialized model (e.g., StarCoder or CodeLlama); for a general chatbot, maybe Gemma 3 or DeepSeek-R1; for multilingual support, consider something like Qwen or Yi. Also pay attention to licenses: if you’re doing a commercial project, make sure the model allows commercial use (many do, but some, such as Llama 2, come with a custom license and an acceptable use policy).

- Stay Updated: The open-weight AI field moves fast. New models, better fine-tunes, and improved tools come out literally every week. Join communities (Reddit’s r/LocalLLaMA, the Discord servers of projects like Ollama, or the Hugging Face forums) to hear about the latest developments. Upgrading from an older model to a newer one can give a big boost (for example, many who used Llama-2-7B upgraded to Mistral-7B when it came out and saw immediate quality improvements). Library updates can also improve speed (llama.cpp, for instance, has become much faster over time), so keep your setup reasonably fresh.
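Following the first tip, a quick way to validate your environment before building anything is to ask the local server which models it has. This sketch assumes Ollama on its default port and uses its `/api/tags` endpoint, which lists locally pulled models; the helper names are illustrative.

```python
import json
import urllib.error
import urllib.request
from typing import List


def parse_model_names(tags_response: dict) -> List[str]:
    """Extract sorted model names from an /api/tags response body."""
    return sorted(m["name"] for m in tags_response.get("models", []))


def list_local_models(base_url: str = "http://localhost:11434") -> List[str]:
    """Return locally available model names, or raise a readable error if the server is down."""
    try:
        with urllib.request.urlopen(f"{base_url}/api/tags", timeout=5) as resp:
            return parse_model_names(json.load(resp))
    except urllib.error.URLError as exc:
        raise RuntimeError(f"Ollama does not seem to be running at {base_url}: {exc}") from exc
```

An empty list means the server is up but no models are pulled yet; a `RuntimeError` means the server itself is not reachable.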

Conclusion: Join the Open-Weight AI Adventure

We are living in a time when AI is truly being democratized. Open-weight models mean that the barriers to entry for sophisticated AI have dropped dramatically. As a software engineer, you no longer need to work at a big tech company to access world-class AI models – you can download one and run it yourself. This opens up endless opportunities to build, experiment, and solve problems.

From ensuring user privacy, to customizing AI for niche tasks, to deploying on hardware you own, the benefits of going the open-weights route are compelling. The ecosystem (tools, models, communities) is rapidly maturing, making it easier than ever to get started.

Now it’s your turn. Have an idea for a killer app or a burning curiosity about how a model would handle your unique dataset? Give open-weight AI a try. Spin up Ollama or LM Studio, grab a model that suits your needs, and start hacking.

The wonderful world of open-weights AI is waiting for you. Dive in, explore, and build something amazing with it. Together, the community of open AI enthusiasts is shaping a future where AI is more accessible, transparent, and in the hands of builders like you. Happy coding, and welcome to the adventure!